Here I will share my current workflow for low-level optimization, which these days is basically a roundtrip between Delphi, SamplingProfiler, ChatGPT and Godbolt.

Here I will share my current workflow for low-level optimization, which these days is basically a roundtrip between Delphi, SamplingProfiler, ChatGPT and Godbolt.

This can allow you to produce code that runs faster than any single C compiler, while staying with Delphi code.

OpenAI’s ChatGPT took the world by storm in December 2022. While for some uses you can quickly reach the limits, there is one domain where it is unmatched: universal translation.

OpenAI’s ChatGPT took the world by storm in December 2022. While for some uses you can quickly reach the limits, there is one domain where it is unmatched: universal translation.

And that universal translation encompasses natural language to code… and translating between Delphi and C.

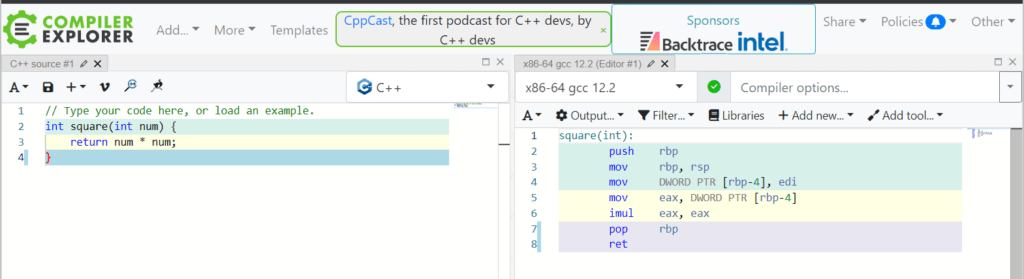

And we get to Godbolt, which is an online service that allows to compile a snippet of C code with a wide variety of C compilers, and provides the low-level assembly output.

Optimization workflow

My optimization workflow starts from Delphi code and a performance diagnostic with SamplingProfiler. Typically that will pinpoint a particular hotspot in code.

The first action is to take a look at that code, and find out which algorithm and data-structures are used.

The fastest computing is the one you don’t do, so then comes a first round of asking ChatGPT for alternatives. If you do not know the academic name of the algorithm being used use, ChatGPT can usually provide it in the first analysis.

While ChatGPT can help, this is a point that requires some expertise. Non-trivial algorithms usually come with non-trivial terminology and concepts… Also this is a phase where ChatGPT can hallucinate a whole lot.

So I always back up ChatGPT suggestions with a web search or Wikipedia.

Low-level optimization with C compilers

Assuming the algorithm is already the best (or cannot be changed), it is time for low-level optimization!

There I just copy-paste the Delphi function prepended with “Convert to C” in ChatGPT. Unless the data-structures are uncommon or have weird field names, the LLM can usually figure those itself.

The next step is to copy-paste the result into Godbolt, fixe the compile issues if any, and start stressing the various C compilers.

The top C compilers I have found helpful are:

- clang which usually does a good job

- icx which sometimes does an excellent job, sometimes not

- gcc which is decent, but rarely excellent

Other compilers are either producing code very close to those three, or have little advantage over the Delphi compiler (like msvc).

My typical compiler options are “-O3 -msse4.1 –fast-math –target=x86_64-w64-windows-gnu” so that the produced assembly is (almost) directly usable in a Delphi asm block (more on that later).

clang and gcc have very comparable outputs, but clang often has an extra bit of performance and flair IME.

That said, it’s all very contextual, and you really, really need to measure and benchmark.

Vectorization is not a Panacea

Many times the C compiler will produce very efficient vectorized code. For instance on a convolution kernel with Complex numbers, I saw clang do an excellent job. It reused and shuffled registers, maximizing SIMD, in a way impossible to hand-optimize without spending a PhD.

But other times, in seemingly trivial loops like a dot product, the output was quite “meh”, falling way behind a first attempt in hand-written assembly.

Another class of problems clang & gcc do not handle that well are when the processing is memory-limited rather than compute-limited. I have encountered multiple cases where they tried so hard to vectorize that they ended up behind the Delphi compiler simpler approach.

One such case was when down-sampling by a factor two a grayscale single-precision matrix: clang ended up 20% slower than the simple Delphi loop. This is a problem where vectorization opportunities are very limited, and come at a high cost of register shuffling. Interestingly enough icx did not fall in that trap, it did not attempt to vectorize, and ended up at the same performance level as the Delphi 64 output (albeit with a larger code).

Intel’s ICX compiler

icx is special. It is Intel’s next gen compiler based on clang. But it is a clang tweaked to have different threshold on loop unrolling and vectorization. However it has a cargo-cult for branchless code, and will go to extreme lengths to avoid branches and jumps. This means that sometimes the result is, well, dreadful.

icx is special. It is Intel’s next gen compiler based on clang. But it is a clang tweaked to have different threshold on loop unrolling and vectorization. However it has a cargo-cult for branchless code, and will go to extreme lengths to avoid branches and jumps. This means that sometimes the result is, well, dreadful.

But in other instances, it is brilliant.

I have in mind a particular dilation kernel with a sliding window when using a computational median (yeah, it’s a quite specific problem). icx output benchmarked 4 times faster than all other compilers! It achieved that by minimizing memory accesses and vectorizing over two loop steps.

That said, on that same kernel when using comparison-based median, its output was twice slower than Delphi 64bit compiler, which itself was 20% faster than clang…

The reason ? Delphi uses jumps and branches with abandon. The dataset was very “blobby”, and thus friendly to branch prediction. icx‘s output with all its branchless code ended up (efficiently) performing an awful lot more work, where the Delphi code just skipped over. clang‘s code was halfway, having factorized some (not all) of the branches. It was not suffering as bad as icx, but it was suffering nonetheless.

It is highly likely that on a different more random dataset, icx’s output would come on top, but hey, that’s not the problem the code was dealing with. So again, benchmarking is key.

Back to Delphi

Once you have your asm code, time to port it back into Delphi, this usually can be done with just a few “search and replace”:

- replace “#” with “//”

- replace “.L” with “@@L”

- replace “xmmword ptr” with an empty string

If there are constants, move them at the end of the asm block after an “.ALIGN 16” and adapt the type declarations (“.byte” with “db”, “.long” with “dd”, etc.).

Another directive you may need is “.NOFRAME”, place it just at the beginning of the asm block to tell the Delphi compiler the asm code already handles the stack frame. When SIMD registers are saved there, you may need to replace the “movaps” with “movups” as the Delphi stack is not highly aligned (note that alignment is usuallyirrelevant on modern CPUs).

Converting the assembly with ChatGTP is too risky IME. It often messes up a register reference or two. So don’t bother, especially since the few search & replace are enough.

This allows to get the best of all top C compilers low-level optimization in Delphi code, when they are on top, because none of them always come on top, and the difference on particular routines can range from 20% to 400%!

You can then have code that is faster than Delphi, faster than clang, faster than icx and faster than gcc. 😁

As a bonus, the asm section in Delphi are properly documented in map files and TD32, meaning the exact hotpots will be qualified when running SamplingProfiler, so you go for another round 😉

Great write up!

Since clang and gcc are open source, did you consider informing them of the observed bottlenecks/disadvantages they have?

Documenting this with a few examples may allow them to add optimizer passes for these cases.

I alas do not really have the time for properly reporting, as this would involve not just reporting a small function, but also a benchmark and a dataset, along with an alternative implementation in asm to show the differences (because just providing Delphi code wouldn’t help them).