A compiled script, a TdwsProgram, cannot be saved to a file, and will not ever be. Why is that?

This question dates back to the first DWS. Since it was asked again recently, I will lay out the rationale here.

- DWS has a very fast compiler, so compiling a script carries no meaningful performance penalty compared to loading and de-serializing a binary representation. How fast is it? See below.

- DWS lets you define custom filters, which make it easy to encrypt your scripts, if hiding the script source is what you were after with bytecode.

- The DWS compiler/parser portion is quite light (currently less than 75 kB), especially compared to the size of the Delphi libraries you will be using for the runtime. You probably will not notice it in the EXE size once you expose more than a few trivial libraries.

- Last but not least, when loading a binary representation of a script, you have to make sure all libraries are compiled into the application that loads and wants to execute the script, and that they are entirely backward-compatible with what was exposed to the script back when it was compiled. That is irrelevant when re-compiling.

How fast is the DWS compiler?

I did some quick benchmarking against PascalScript and Delphi itself.

I generated a script based on the following template:

```pascal
var myvar : Integer;

begin
   myVar:=2*myvar-StrToInt(IntToStr(myvar));
end;
```

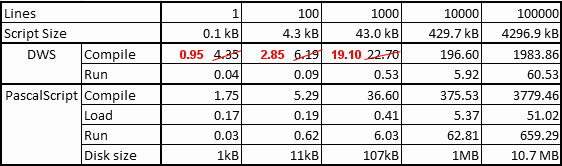

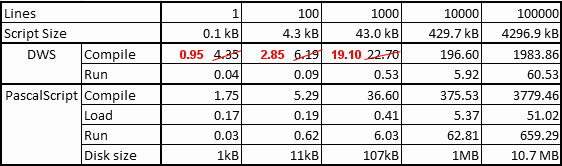

The assignment line appears once, 100 times, 1000 times, etc., depending on the test case. The resulting script was saved to a file. For DWS, the benchmark consisted of loading the file, compiling it and then running it. For PascalScript, the times are broken down into compiling, loading the bytecode output from a file, and then running that bytecode. Disk size indicates the size of the generated bytecode.
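As an illustration, the test scripts could be generated with a small Delphi console program along these lines. This is a sketch, not the generator actually used for the benchmark: the program name, the `SaveBenchScript` helper and the `bench_N.txt` file names are made up for this example, and the list of sizes is taken from the line counts mentioned in this post.

```pascal
program GenBenchScript;

{$APPTYPE CONSOLE}

uses
  SysUtils, Classes; // newer Delphi versions may need System.SysUtils, System.Classes

const
  // Line counts mentioned in the post (1 up to 100k)
  Sizes: array[0..4] of Integer = (1, 100, 1000, 10000, 100000);

// Writes a script made of the declaration, then the assignment line
// repeated LineCount times inside a begin/end block.
// (Hypothetical helper, named for this example only.)
procedure SaveBenchScript(const FileName: string; LineCount: Integer);
var
  Lines: TStringList;
  i: Integer;
begin
  Lines := TStringList.Create;
  try
    Lines.Add('var myvar : Integer;');
    Lines.Add('begin');
    for i := 1 to LineCount do
      Lines.Add('myVar:=2*myvar-StrToInt(IntToStr(myvar));');
    Lines.Add('end;');
    Lines.SaveToFile(FileName);
  finally
    Lines.Free;
  end;
end;

var
  k: Integer;
begin
  // One output file per test case, e.g. bench_1000.txt (assumed naming)
  for k := Low(Sizes) to High(Sizes) do
    SaveBenchScript(Format('bench_%d.txt', [Sizes[k]]), Sizes[k]);
end.
```

Each generated file contains the same statement repeated, so the compile time grows with the line count while the script has no loops at all.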

All times are in milliseconds (and have been updated, see Post-Scriptum below):

For the line counts expected of typical scripts (fewer than 1000), the cost of not being able to save to bytecode, compared to PascalScript, is a one-time hit of under 15 milliseconds on the first run.

This illustrates why maintaining a bytecode version is not really worth the trouble for scripting purposes, which also matches my practical experience.

For larger scripts, execution time is expected to dwarf compile time: the benchmark code tested here doesn’t have any loops, whereas anything more real-life will have loops and will likely have a greater runtime/compile-time ratio.

What of Delphi?

For reference, I tried compiling the larger line-count versions with Delphi XE, from the IDE.

- The 100k-line case took 3 minutes 27 seconds to compile (ouch!), obviously hitting some Delphi parser or compiler limitation. Runtime was 63 ms.

- The 10k-line case compiled in a more reasonable 2400 ms in Delphi, and ran in 4 ms (50% faster than DWS).

What else? The DWS compiler has a higher initial setup cost than PascalScript, but as code size grows, it starts pulling ahead. That setup overhead nevertheless deserves some investigation 😉.

Once compiled, the 10x execution speed advantage of DWS over PascalScript is consistent with other informal benchmarks.

Post-Scriptum

I took a quick look at the setup overhead with SamplingProfiler and found two bottlenecks/bugs. Fixing them shaved 3 ms off the DWS compile times, i.e. the compile times for the 1, 100 and 1000 line cases are now 0.95 ms, 2.85 ms and 19.1 ms respectively.

With XE2 now