There will come a time when SamplingProfiler reports that begin or end is your bottleneck. This may sound a little surprising, but it’s actually quite a common occurrence, and one that instrumenting profilers are not going to point out, so it is worth a little explanation.

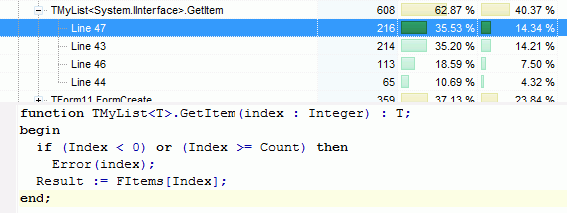

This can be illustrated with the minimalistic example of an array property getter. Witness the innocuous-looking code below:

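```pascal
function TMyList<T>.GetItem(index : Integer) : T;
begin
   // range check: report an out-of-bounds index
   if (index < 0) or (index >= Count) then
      Error(index);
   // return the item (for an interface T, this involves reference counting)
   Result := FItems[index];
end;
```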

Nothing out of the ordinary there: you can find similar-looking code in practically every array-based collection in the RTL and in many third-party libraries. But someday, that GetItem will be a bottleneck, and you could be left looking at profiling results like these:

Yes, those are the begin and end lines, taking up more than 70% of the CPU time spent inside GetItem…

You knew it! Sampling profilers are unreliable… or are they? Surely the index range checking must be the culprit? Or the assignment and its reference counting business? Well, they could be, but in this case they aren’t.

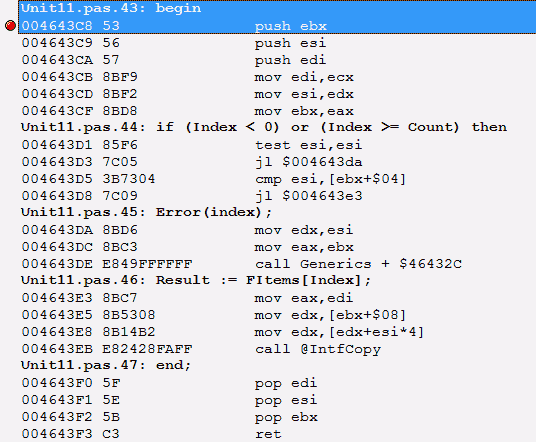

To understand why, let’s have a look at the CPU view. Place a breakpoint on your begin, run up to there and hit Ctrl+Alt+C; here is what you could see:

That’s a whole lot of traffic to the stack: 3 registers saved, 3 copies. Those things aren’t free: they can dwarf what your explicit code does, and in this example, they do. We didn’t even have any local variables; if we did, they would have required setup and teardown code, and that code would have been “hidden” in begin and end too.

This illustrates one difference between sampling and instrumenting profilers: a sampling profiler can pinpoint the actual bottleneck even when it lies “outside” your explicit code, so you don’t waste time trying to optimize what isn’t critical.

Now what can you do to improve things locally? With generics, an interface type and Delphi 2009 SP2, not much, short of going BASM. The bottleneck code is compiler-generated, and optimizing the assignment or the range checking would only provide minimal benefits. If you want to go faster, you’ll have to reduce the number of calls to GetItem, i.e., open that “Show Callers” pane, have a look there, and solve the issue in the higher-level routines involved.
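As a minimal sketch of what reducing the calls can look like on the caller side, the program below uses the RTL’s Generics.Collections TList<T> as a stand-in for TMyList, with an interface as the item type. IValue, TValue, BuildSample, SumSlow and SumFast are hypothetical names made up for this illustration. SumSlow goes through the Items getter up to three times per iteration, while SumFast calls it once and keeps the item in a local interface variable.

```pascal
program HoistGetItem;

{$IFDEF FPC}{$MODE DELPHI}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}

uses
  Generics.Collections;

type
  // Hypothetical item interface, for illustration only
  IValue = interface
    function GetValue : Integer;
  end;

  TValue = class(TInterfacedObject, IValue)
  private
    FValue : Integer;
  public
    constructor Create(aValue : Integer);
    function GetValue : Integer;
  end;

constructor TValue.Create(aValue : Integer);
begin
  inherited Create;
  FValue := aValue;
end;

function TValue.GetValue : Integer;
begin
  Result := FValue;
end;

// Hypothetical helper: builds a list holding the values -2..3
function BuildSample : TList<IValue>;
var
  i : Integer;
  v : IValue;
begin
  Result := TList<IValue>.Create;
  for i := -2 to 3 do begin
    v := TValue.Create(i);
    Result.Add(v);
  end;
end;

// Goes through the Items getter (and its hidden begin/end code)
// up to three times per iteration
function SumSlow(list : TList<IValue>) : Integer;
var
  i : Integer;
begin
  Result := 0;
  for i := 0 to list.Count-1 do
    if list[i].GetValue > 0 then
      Result := Result + list[i].GetValue * list[i].GetValue;
end;

// Calls the getter once per iteration and reuses the local reference
function SumFast(list : TList<IValue>) : Integer;
var
  i : Integer;
  item : IValue;
begin
  Result := 0;
  for i := 0 to list.Count-1 do begin
    item := list[i];
    if item.GetValue > 0 then
      Result := Result + item.GetValue * item.GetValue;
  end;
end;

var
  list : TList<IValue>;
begin
  list := BuildSample;
  try
    // both sum the squares of the positive values: 1 + 4 + 9
    Writeln(SumSlow(list), ' ', SumFast(list));
  finally
    list.Free;
  end;
end.
```

Both functions compute the same sum. Keep in mind that SumFast’s local interface variable brings its own hidden setup and teardown in its begin and end, so whether such a change pays off in a real caller is something to confirm with the profiler rather than assume.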

But there are other situations in which you can influence the auto-generated begin/end code, the solutions then typically revolve around distributing the code across smaller local functions or methods, tweaking your variable usage, separating branches, or if all else fails, going BASM… but that is food for future posts!

Wouldn’t defining index as const improve the situation, as there would be no need for a local copy of the value?

Best Regards

Exactly what was the point of this article? You claim “Yes, those are the begin and end lines taking up more than 70% of the CPU time spent inside GetItem”, but exactly what do you want us to do about it?

You have written an article with no point at all…

“Here is a problem”… Fine, and how do we deal with it?

Marco: const can improve the situation at the call point for types such as strings, records or interfaces, but for integers it does nothing. The copying and shuffling isn’t related to the index parameter but to the interface result.
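To illustrate what const changes for an interface parameter, here is a small sketch assuming Delphi’s usual handling of interface parameters. The hypothetical RefCountByValue and RefCountByConst functions read TInterfacedObject.RefCount from inside the callee: with a value parameter, the callee takes its own reference on entry and releases it on exit (code hidden in its begin and end), so it should see one more reference than with const, where no reference is taken.

```pascal
program ConstIntfParam;

{$IFDEF FPC}{$MODE DELPHI}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}

// Value parameter: the callee takes its own reference on entry and
// releases it on exit, code that lives in its begin and end
function RefCountByValue(intf : IInterface; obj : TInterfacedObject) : Integer;
begin
  Result := obj.RefCount;
end;

// const parameter: no reference is taken, so no such code is generated
function RefCountByConst(const intf : IInterface; obj : TInterfacedObject) : Integer;
begin
  Result := obj.RefCount;
end;

var
  obj : TInterfacedObject;
  intf : IInterface;
begin
  obj := TInterfacedObject.Create;
  intf := obj;  // intf now holds the only reference
  Writeln('by value: ', RefCountByValue(intf, obj));
  Writeln('by const: ', RefCountByConst(intf, obj));
end.
```

For an Integer parameter such as index, there is no reference to take, which is why const makes no difference there.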

Jens: knowing what NOT to optimize is just as important as knowing what to optimize; that’s what the whole premature optimization thing is actually about, applying effort where, and only if, it will matter.

The whole point here is that with an instrumenting profiler and information at the procedure level (likely inflated by a heisenbug effect), you would have been left trying to optimize the code inside the procedure, code which isn’t the source of the slowdown, thus wasting your time.

As for the solution in this particular case, it’s about taking care of the calls into that GetItem rather than the GetItem itself. Other begin-end bottleneck situations can have different solutions, which will be discussed later on 🙂